In the digital marketing era, the amount of data generated, stored and analysed across an ever-growing number of systems has increased exponentially. It is not only the data and algorithmic landscape that has grown more complex, however. Activation systems have also become more advanced, and with them the number of ways you can communicate with your customers has grown dramatically.

Still, with all this technological advancement, why do companies still fail to deliver value from their analytics and AI projects?

“Most analytics and AI projects fail because operationalisation is only addressed as an afterthought. The top barrier to scaling analytics and AI implementations is complexity around integrating the solution with existing enterprise applications and infrastructure.” – Gartner 2021

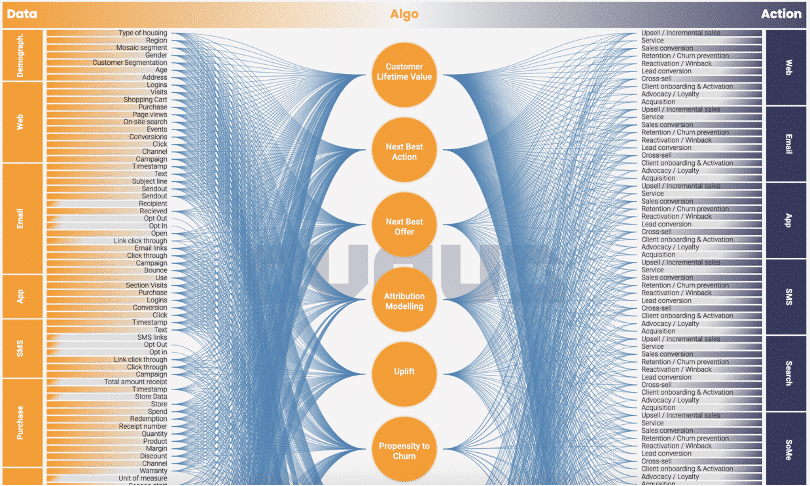

Exhibit 1: Demonstration of the complexity of the data x algo x action landscape

In software engineering, operations is an umbrella term for managing, monitoring and deploying production applications. Similarly, in data and analytics, operations is the process of managing, monitoring and deploying algorithms so that predictions reach an end system where action can be taken and business results created.

Manual operations for a machine learning model would look like this: a data scientist creates data sets on a laptop and trains a model, later runs the model on a new data set to get predictions, saves the predictions as a CSV file, and emails the file to someone who can import it into, for example, an email system. A fully automated operations pipeline for machine learning would handle all of these tasks automatically, and would also decide when a model needs to be retrained instead of reusing a previously trained one.
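
To make the retraining decision concrete, here is a minimal sketch of one possible rule: retrain when the model is too old or when its score has dropped too far from the value recorded at training time. The `ModelStatus` structure, the `should_retrain` function and both thresholds are assumptions made for illustration, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class ModelStatus:
    """Monitoring snapshot for a deployed model (hypothetical structure)."""
    baseline_score: float      # validation score recorded when the model was trained
    recent_score: float        # same metric measured on recently labelled data
    days_since_training: int


def should_retrain(status: ModelStatus,
                   max_relative_drop: float = 0.05,
                   max_age_days: int = 90) -> bool:
    """Return True when the model has degraded or grown too old.

    Both thresholds are illustrative assumptions, not recommendations.
    """
    if status.days_since_training > max_age_days:
        return True
    if status.baseline_score <= 0:
        return True  # no meaningful baseline to compare against, so retrain
    relative_drop = (status.baseline_score - status.recent_score) / status.baseline_score
    return relative_drop > max_relative_drop


# Example: the score fell from 0.80 to 0.70, a 12.5% relative drop.
needs_retraining = should_retrain(ModelStatus(0.80, 0.70, days_since_training=10))
```

In an automated pipeline a check like this would run on a schedule, so that nobody has to remember to look at the model.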

The Operations Swamp

Unless the operationalisation of data and analytics is addressed proactively, your analytical organisation is destined to get stuck in the operations swamp. Some organisations have sunk so deep into the swamp that they no longer realise they are in one. Key signs that you are in such a swamp include:

- You are always thinking of operations as a future problem

- Your best data science and engineering talent is stuck with operational tasks, leaving little or no time for new development

- You have a hard time retaining your data science talent

- You scale your operations through recruiting people and not by increasing automation

- You create lots of algorithms but none of them ends up being used

Investing in proper tools and frameworks for data and analytical operations will enable you to scale up without having to recruit, so that each data scientist can produce 10 algorithms instead of 5 data scientists being needed to deliver the same value. This investment will also let your data scientists spend their time on creative and experimental tasks instead of manual operations, which will significantly reduce employee attrition. The economic argument alone makes sense for any organisation that is investing in analytics, or is thinking about it.

Gartner recommends:

- “Invest up front in upskilling teams and upgrading the technology stack across compute infrastructure, DevOps toolchains and adjacent operationalisation areas to increase the success rate of AI initiatives and value creation.

- Utilise the best practices of DataOps and ModelOps in symphony to reduce friction between various machine learning (ML) and AI artifacts, and to reduce friction between the teams associated with them.

- Establish a strong DevOps practice across the various stages—data pipeline, data science, ML, AI and underlying infrastructure — to radically improve the delivery pipeline and operationalise analytics and AI architectures.

- Create an integrated XOps practice that blends disparate functions, teams and processes to support data processing, model training, model management and model monitoring, allowing for continuous delivery of AI-based systems.

- Use DataOps, ModelOps and DevOps practices and expertise to build composable technical architecture providing resilience, modularity and autonomy to business functions, and orchestrating the delivery of analytics and AI solutions.”

To us, it is evident that Gartner, with good reason, puts a lot of emphasis on operations as a key success factor for analytical and AI initiatives. However, Gartner does not detail what the best practices within DevOps, DataOps and ModelOps actually are. To give you a more concrete picture of what the different XOps practices mean, we have drawn on our 10+ years of experience in the industry to identify 5 key frameworks for accelerating the operationalisation of algorithms.

The solution: Top 5 key frameworks for an accelerated operationalisation of algorithms

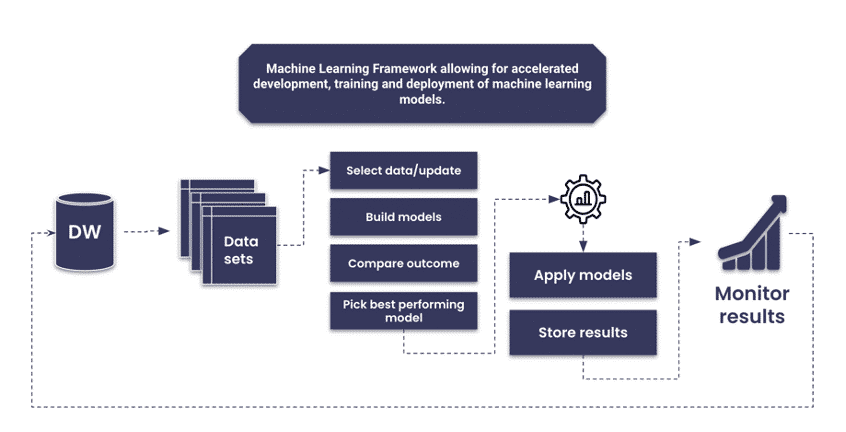

- ML Framework: A Machine Learning framework accelerates the development of machine learning models. A good ML framework should handle every step in the chain of building a model: it should automatically create new training data sets, train many different models and compare them on a validation set to find the best candidate, and deploy the new model so that it is updated everywhere it is used. The framework should also version-control models and monitor results over time to determine when a model should be retrained automatically.

Exhibit 2: Machine Learning Framework
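
The "train many models and compare them on a validation set" step can be sketched in a few lines. The example below is a minimal, standard-library-only illustration: the two candidate trainers (`train_mean`, `train_linear`), the `select_best` helper and the toy data are all assumptions chosen to keep the sketch self-contained, and a real framework would plug in proper model libraries.

```python
from statistics import fmean
from typing import Callable, Dict, Sequence, Tuple

Predictor = Callable[[float], float]
Trainer = Callable[[Sequence[float], Sequence[float]], Predictor]


def train_mean(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Baseline candidate: always predict the mean of the training targets."""
    mean_y = fmean(ys)
    return lambda x: mean_y


def train_linear(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Candidate: one-variable least-squares line."""
    mean_x, mean_y = fmean(xs), fmean(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        return lambda x: mean_y  # degenerate input, fall back to the mean
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / var_x
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def mse(model: Predictor, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error of a model on a data set."""
    return fmean([(model(x) - y) ** 2 for x, y in zip(xs, ys)])


def select_best(candidates: Dict[str, Trainer],
                train: Tuple[Sequence[float], Sequence[float]],
                valid: Tuple[Sequence[float], Sequence[float]]):
    """Train every candidate and return the one with the lowest validation error."""
    models = {name: trainer(*train) for name, trainer in candidates.items()}
    scores = {name: mse(model, *valid) for name, model in models.items()}
    best = min(scores, key=scores.get)
    return best, models[best], scores


train_set = ([1, 2, 3, 4], [2.1, 3.9, 6.2, 7.8])
valid_set = ([5, 6], [10.1, 11.9])
best_name, best_model, validation_scores = select_best(
    {"mean": train_mean, "linear": train_linear}, train_set, valid_set
)
```

The important design point is that the comparison uses data the models were not trained on, so the winner is chosen on how well it generalises rather than how well it memorises.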

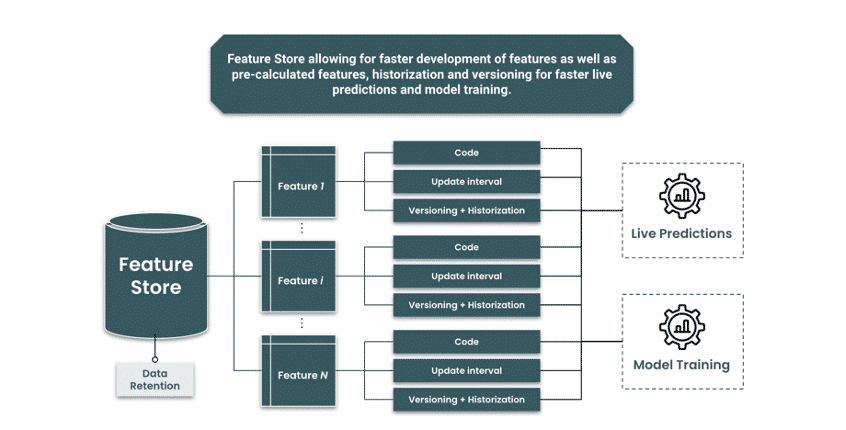

- Feature Store: A Feature Store allows for faster development of machine learning features. Because all feature calculations are stored (hence the name), most features are already pre-calculated when they are needed, which makes serving much faster. Data scientists can then pick and choose features for model development on the fly, instead of waiting hours or days for a data set to be calculated, which leaves more room for creativity. The end user of the feature store should also not have to care whether a feature is used for training a model, for batch prediction or for online prediction.

Exhibit 3: Top 5 key frameworks for an accelerated operationalisation of algorithms – Feature Store
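
As a rough illustration of the idea, the sketch below shows a toy in-memory feature store: features are defined once, pre-calculated in one pass, and then read through the same call whether the consumer is building a training set or scoring a single customer online. The `FeatureStore` class, its method names and the sample records are hypothetical; production feature stores add persistence, versioning and point-in-time correctness.

```python
from typing import Callable, Dict, Iterable, List


class FeatureStore:
    """Toy in-memory feature store (hypothetical API, for illustration only)."""

    def __init__(self) -> None:
        self._definitions: Dict[str, Callable[[dict], float]] = {}
        self._values: Dict[str, Dict[str, float]] = {}  # entity id -> feature -> value

    def register(self, name: str, compute: Callable[[dict], float]) -> None:
        """Declare a feature once so every consumer shares the same definition."""
        self._definitions[name] = compute

    def materialise(self, raw_records: Dict[str, dict]) -> None:
        """Pre-calculate every registered feature for every entity."""
        for entity_id, record in raw_records.items():
            self._values[entity_id] = {
                name: compute(record) for name, compute in self._definitions.items()
            }

    def get_features(self, entity_ids: Iterable[str],
                     names: List[str]) -> List[Dict[str, float]]:
        """Look up stored values; the call is identical for training, batch and online use."""
        return [{n: self._values[e][n] for n in names} for e in entity_ids]


store = FeatureStore()
store.register("order_count", lambda r: float(len(r["orders"])))
store.register("total_spend", lambda r: float(sum(r["orders"])))
store.materialise({"c1": {"orders": [10, 20]}, "c2": {"orders": [5]}})

# Training or batch prediction: many entities, several features.
training_rows = store.get_features(["c1", "c2"], ["order_count", "total_spend"])
# Online prediction: one entity, same call.
online_row = store.get_features(["c2"], ["total_spend"])[0]
```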

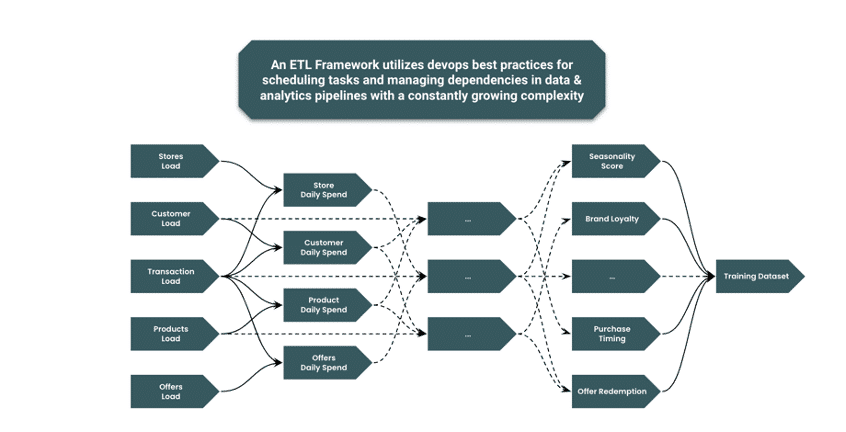

- ETL Framework: An ETL Framework applies DevOps best practices to scheduling tasks and managing dependencies in data and analytics pipelines of constantly growing complexity. Without a proper ETL framework, code duplication will quickly turn your analytical unit into a gang of swamp dwellers. A good framework also provides solid functionality for testing and verifying code changes, which builds trust in releasing new code and lowers the threshold for complex but beneficial changes.

Exhibit 4: Top 5 key frameworks for an accelerated operationalisation of algorithms – ETL Framework
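
Dependency management is the part of an ETL framework that is easiest to show in code. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (3.9+) to run tasks so that each one starts only after the tasks it depends on. The `run_pipeline` helper and the task names are made up for illustration; a real scheduler would add retries, logging and parallel execution.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 depends_on: Dict[str, Set[str]]) -> List[str]:
    """Run every task after its dependencies and return the execution order.

    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    # Include tasks without declared dependencies so that they are scheduled too.
    graph = {name: depends_on.get(name, set()) for name in tasks}
    executed: List[str] = []
    for name in TopologicalSorter(graph).static_order():
        tasks[name]()  # a KeyError here means a dependency names an unknown task
        executed.append(name)
    return executed


log: List[str] = []
pipeline_tasks = {
    "export_to_email_system": lambda: log.append("export"),
    "score_customers": lambda: log.append("score"),
    "build_features": lambda: log.append("features"),
    "extract_orders": lambda: log.append("extract"),
}
pipeline_dependencies = {
    "build_features": {"extract_orders"},
    "score_customers": {"build_features"},
    "export_to_email_system": {"score_customers"},
}
execution_order = run_pipeline(pipeline_tasks, pipeline_dependencies)
```

Declaring dependencies as data, rather than hard-coding the order of calls, is what lets the pipeline grow without every change requiring a manual re-ordering.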

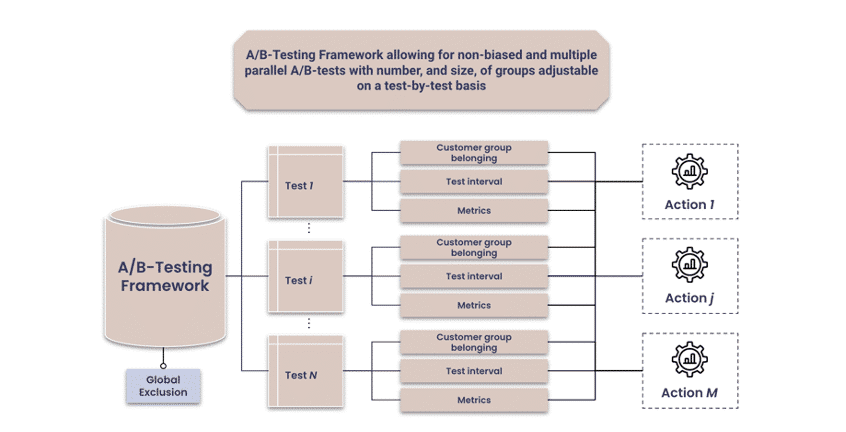

- A/B-Testing Framework: An A/B-Testing Framework is essential for any organisation that wants to measure the results of its actions. The framework allows for unbiased, multiple parallel A/B tests in which the number and size of groups are adjustable on a test-by-test basis. It also follows up on tests automatically, via pre-defined metrics, when the specified test period has ended.

Exhibit 6: Top 5 key frameworks for an accelerated operationalisation of algorithms – A/B Testing Framework
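
One common way to get unbiased groups whose number and size can differ per test is to hash the customer id together with the test name and map the result onto the group weights. The sketch below shows that technique; it is an assumption about how such a framework could work, not a description of any specific product, and `assign_group` and the example names are hypothetical.

```python
import hashlib
from typing import Dict


def assign_group(customer_id: str, test_name: str, groups: Dict[str, float]) -> str:
    """Deterministically assign a customer to one of the weighted groups.

    Hashing the test name together with the customer id keeps the assignment
    stable within a test and independent between parallel tests.
    """
    total = sum(groups.values())
    if total <= 0:
        raise ValueError("group weights must sum to a positive number")
    digest = hashlib.sha256(f"{test_name}:{customer_id}".encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2 ** 64  # uniform value in [0, 1)
    cumulative = 0.0
    for name, weight in groups.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return name  # guards against floating-point rounding at the last boundary


# Example: a test with a 20% control group and two treatment groups of 40% each.
spring_groups = {"control": 0.2, "offer_a": 0.4, "offer_b": 0.4}
group_for_customer = assign_group("customer-123", "spring_campaign", spring_groups)
```

Because the assignment is a pure function of the ids, it can be recomputed at follow-up time without storing a separate allocation table.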

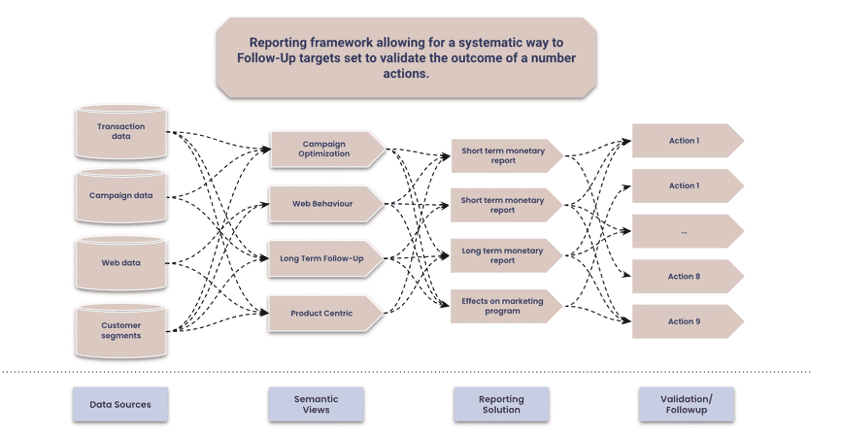

- Reporting Framework: A Reporting Framework provides a systematic way to follow up on targets via standardised business metrics specific to your industry. It is best used together with your existing BI tool for visualisation, and with the machine learning and A/B-testing frameworks for automatic follow-up on tests and model performance. A good reporting framework also uses augmented analytics to describe why a metric has changed, and not only that it has.

Exhibit 7: Top 5 key frameworks for an accelerated operationalisation of algorithms – Reporting framework
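
A very simple form of explaining why a metric has changed is to break an additive metric, such as revenue, down by segment and rank the segments by how much each contributed to the change. The sketch below does only that; the `explain_change` function and the channel figures are invented for illustration, and real augmented analytics tools go considerably further.

```python
from typing import Dict, List, Tuple


def explain_change(previous: Dict[str, float],
                   current: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank segments by their absolute contribution to the change in an additive metric."""
    segments = set(previous) | set(current)
    deltas = {s: current.get(s, 0.0) - previous.get(s, 0.0) for s in segments}
    return sorted(deltas.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical revenue per channel for two consecutive periods.
last_month = {"email": 100.0, "sms": 50.0, "push": 20.0}
this_month = {"email": 70.0, "sms": 55.0, "push": 20.0}

drivers = explain_change(last_month, this_month)
total_change = sum(delta for _, delta in drivers)
```

Here total revenue fell by 25, and the ranking shows that the drop in the email channel more than accounts for it while SMS partly offset it.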

“Consistently measuring and reporting on the business value of analytics and AI assets remains a challenge. This requires monitoring of analytics and AI assets throughout their life cycle, not only from their development stages to their production environments, but also through the various stages of their integration.” – Gartner 2021

In summary, operations for data and analytics is not a can that should be kicked down the road. If your organisation wants a competitive advantage from analytics and AI, investing in operations and the necessary tools is essential to strengthening your market position.

If you want to learn more about this topic, watch the Avaus Expert Talks 4: Algo Operations or reach out to anyone at Avaus.

Source: Gartner, Top Trends in Data and Analytics for 2021: XOps, Erick Brethenoux, Donald Feinberg, Afraz Jaffri, Ankush Jain, Soyeb Barot, Rita Sallam, 16 February 2021
