Building a good Machine Learning model is no longer the hard part. Turning it into something production-ready and maintainable is. Developing models and operationalizing them are two very different challenges, and without a shared framework, even strong models can become unscalable, untraceable, and fragile.

Most data scientists today can wrangle a dataset, train something decent, and even deploy it as an API or a batch job with some help from engineering.

But what happens after that? What happens when you’ve built a few good models, and now there are ten more on the roadmap?

Suddenly you’re asking questions that have nothing to do with model accuracy:

- How do we version control and code review these pipelines?

- How do we reuse existing logic, rather than rebuilding it every time?

- How do we automate deployment and avoid manual handovers?

- What structure do we share so that our models behave like real software?

This blog post is about that moment. Not when you’re building your first model, but when it’s clear that scaling Machine Learning requires a production framework.

Why scaling Machine Learning gets messy, fast

A single model is easy to manage. A handful starts to get annoying. But once you’re dealing with multiple teams, multiple use cases, and multiple models, things can spiral fast.

Here’s what tends to happen:

- Everyone writes their own data cleaning scripts, often differently for the same data.

- Teams often duplicate or hardcode feature engineering for each use case.

- There’s no way to trace which version of a model is live, or what changed.

- There’s no model monitoring, so performance drops often go unnoticed.

- Models are never retrained, and feature drift steadily reduces model performance.

- Files like erics_model_final_v2.ipynb become your production code.

We’ve seen this happen firsthand. One client needed over three months to get their first model into production, not because the model was complex, but because they had to build everything around it from scratch. No shared pipelines, no automation, no way to plug into the rest of the system.

Compare that with another case, where we had the right structure in place.

There, we defined a model in the morning and had it deployed, properly versioned, integrated, and monitored before lunch the same day.

Same data scientists. Same algorithms. Totally different outcome.

A framework for building and running Machine Learning models

So how do you go from the mess above to a setup where Machine Learning can scale?

You need a framework. Not a tool. Not a product. A shared way of working that turns model development into a process teams can repeat, test, maintain, and improve.

A good Machine Learning framework covers the full lifecycle:

- Data ingestion & cleaning
  - Structured, reusable code that wraps common cleaning steps, instead of every notebook doing its own thing.

- Feature engineering
  - Feature creation becomes modular, testable, and versioned.

- Model training & selection
  - Model selection depends on validation performance, not on instinct or on whichever model happened to be coded first.

- Model versioning
  - No model is deployed without a version, because model deployment follows standard software development practices, including version control, code review, and CI/CD pipelines.

- Deployment
  - Deployment follows the same process as any mature DevOps setup (MLOps, as it’s called when dealing with ML models) and is automated using GitLab Runners, GitHub Actions, or similar tools.

- Monitoring and feedback
  - Performance is tracked. Feedback is collected. Retraining happens on a schedule, or when the data tells you it should.
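To make the first four stages more concrete, here is a minimal Python sketch under stated assumptions. It is not Algocomponents and not any real library’s API: every name (`clean_records`, `FEATURES`, `build_features`, `select_best`, `model_version`) is hypothetical, the candidate "models" are plain functions standing in for trained estimators, and mean absolute error is an assumed validation metric. It shows one shared cleaning rule, one feature registry reused by every model, selection by validation score, and a version id derived from the model’s configuration.

```python
# Hypothetical sketch of shared lifecycle building blocks; none of these
# names come from Algocomponents or any other real library.
import hashlib
import json
from typing import Callable, Dict, List, Optional, Tuple

Record = Dict[str, float]
Model = Callable[[List[float]], float]  # stand-in for a trained estimator


def clean_records(rows: List[Dict[str, Optional[float]]]) -> List[Record]:
    """Shared cleaning step: drop rows with missing values, cast the rest to float."""
    cleaned: List[Record] = []
    for row in rows:
        if any(value is None for value in row.values()):
            continue  # one agreed rule for missing data, reused by every pipeline
        cleaned.append({key: float(value) for key, value in row.items()})
    return cleaned


# One registry of feature definitions, so every model computes features the same way.
FEATURES: Dict[str, Callable[[Record], float]] = {
    "spend_per_visit": lambda r: r["spend"] / r["visits"] if r["visits"] else 0.0,
    "visits": lambda r: r["visits"],
}


def build_features(rows: List[Record], names: List[str]) -> List[List[float]]:
    """Turn cleaned records into feature vectors using the shared registry."""
    return [[FEATURES[name](row) for name in names] for row in rows]


def select_best(
    candidates: Dict[str, Model], x_val: List[List[float]], y_val: List[float]
) -> Tuple[str, float]:
    """Pick the candidate with the lowest mean absolute error on validation data."""

    def mae(model: Model) -> float:
        return sum(abs(model(x) - y) for x, y in zip(x_val, y_val)) / len(y_val)

    scores = {name: mae(model) for name, model in candidates.items()}
    best = min(scores, key=scores.__getitem__)
    return best, scores[best]


def model_version(config: Dict[str, object]) -> str:
    """Derive a reproducible version id from the model's configuration."""
    payload = json.dumps(config, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]
```

Hashing the sorted configuration means the same config always produces the same version id, which makes it straightforward to check which model is live and what changed between versions. In a real setup the id would more likely come from a model registry or a Git commit; the hash just keeps the sketch self-contained.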
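Retraining "when the data tells you it should" needs a concrete trigger. One common choice, used here as an assumption rather than as the method this framework prescribes, is the Population Stability Index (PSI), which compares the share of training values and live values that fall into each bin of a feature. The sketch below uses ten equal-width bins over the training range and the widely used rule-of-thumb threshold of 0.2; the function names and both defaults are illustrative and would need tuning per feature.

```python
# Drift check via the Population Stability Index (PSI), an assumed choice of
# drift metric; bin count and threshold are illustrative defaults.
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over equal-width bins.

    Bin edges span the range of the training ("expected") sample; live values
    outside that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(
    training_sample: Sequence[float], live_sample: Sequence[float], threshold: float = 0.2
) -> bool:
    """Flag a feature for retraining when its live distribution has drifted."""
    return psi(training_sample, live_sample) > threshold
```

A scheduled job could run `should_retrain` on each monitored feature and kick off the training pipeline when any of them crosses the threshold, which combines the "on a schedule" and "when the data tells you" triggers.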

It’s not about over-engineering. It’s about building fast without creating chaos.

This process also lets you test a new model on only a subset of your data or users, rather than flipping everything over at once. That’s crucial for faster iteration and lower risk.
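Testing a new model on a subset of users usually comes down to routing a stable share of traffic to it. A minimal sketch, assuming each user has a string ID: hash the ID together with a rollout-specific salt, map the hash to a number in [0, 1), and send users below the rollout share to the candidate model. The function name, salt, and variant labels are hypothetical.

```python
# Hypothetical deterministic traffic split for a staged model rollout.
import hashlib


def assign_variant(user_id: str, rollout_share: float, salt: str = "model-v2-rollout") -> str:
    """Return "candidate" for a stable `rollout_share` of users, else "current"."""
    if not 0.0 <= rollout_share <= 1.0:
        raise ValueError("rollout_share must be between 0 and 1")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # 48 bits of the hash map exactly onto a float in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "candidate" if bucket < rollout_share else "current"
```

Because the assignment is deterministic, a user gets the same model on every request, and raising `rollout_share` from 0.1 to 0.5 only adds users to the candidate group; nobody already on it is moved back. Changing the salt reshuffles the split for the next experiment.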

What it looks like in practice

Here’s an example setup we’ve seen succeed in production:

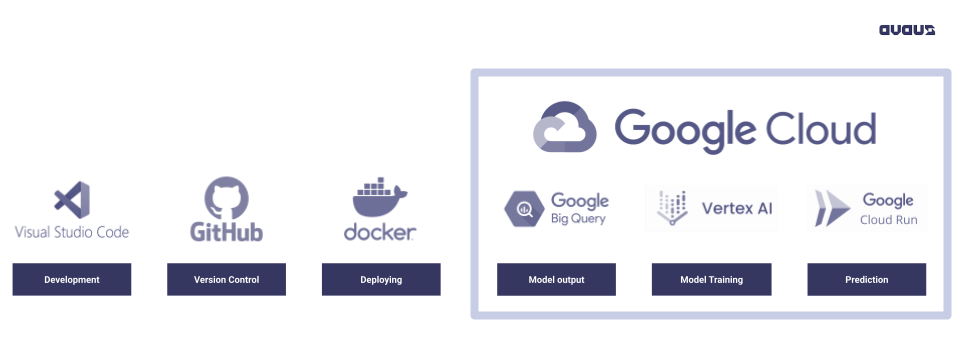

(End-to-end Machine Learning pipeline with VSCode, GitHub, Docker, and Google Cloud tools)

- Code in VSCode, versioned in GitHub with pull requests and reviews

- Pipelines in Docker, so they’re portable and environment-agnostic

- GCP for infrastructure, using:
  - BigQuery for data access
  - Vertex AI for training and tracking models
  - Cloud Run to deploy model scoring APIs or batch processes (which can run on GPUs)

This stack isn’t unique to us. It’s just one example of how to glue together modern tooling into a setup that feels cohesive and scalable, where everything works the same way every time.

Algocomponents

If you work with Avaus, you get access to our internal tooling, in particular a Python library called Algocomponents. It wraps SQL pipelines, handles configuration, and helps structure complex workflows. It’s optional, but it helps teams accelerate development.

The full documentation for Algocomponents is available here: https://algocomponents.avaus.com/

Read more about the Avaus components library:

https://www.avaus.com/news/how-do-we-minimise-your-time-spent-achieving-your-data-driven-vision/

Good models are not enough

Building a good model isn’t the hard part anymore. That’s the easy part.

The hard part is building everything around the Machine Learning model: the parts that turn an experiment into a production system that delivers continuous value and can be maintained by others.

We’ve seen how powerful it is when teams adopt a shared Machine Learning production framework. It enables faster development, easier debugging, and a feedback loop that actually works. It makes model development feel like software development, because at scale that’s what it needs to be.

So if your models are starting to multiply, or if you’re tired of running into the same problems every time you start a new project, it might be time to step back and ask:

What’s the framework you’re building on? Feel free to reach out!